Object Tracking with OpenCV

OpenCV (Open Source Computer Vision) is a library of programming functions mainly aimed at real-time computer vision.[1] It was originally developed by Intel.

What is Object Tracking?

Simply put, locating an object in successive frames of a video is called tracking.

Usually tracking algorithms are faster than detection algorithms. The reason is simple. When you are tracking an object that was detected in the previous frame, you know a lot about the appearance of the object.

You also know the location in the previous frame and the direction and speed of its motion.
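To make this concrete, here is a minimal sketch of how the previous location and motion can narrow the search. It assumes a simple constant-velocity model and boxes given as (x, y, w, h); the function names `predict_bbox` and `search_window` are hypothetical and are not part of OpenCV.

```python
def predict_bbox(prev_bbox, prev_prev_bbox):
    """Predict the next (x, y, w, h) box assuming constant velocity."""
    x1, y1, _, _ = prev_prev_bbox
    x2, y2, w2, h2 = prev_bbox
    vx, vy = x2 - x1, y2 - y1  # displacement per frame
    return (x2 + vx, y2 + vy, w2, h2)


def search_window(bbox, margin):
    """Expand a box by `margin` pixels on every side to get the search area."""
    x, y, w, h = bbox
    return (x - margin, y - margin, w + 2 * margin, h + 2 * margin)


predicted = predict_bbox((110, 52, 40, 40), (100, 50, 40, 40))
print(predicted)                     # (120, 54, 40, 40)
print(search_window(predicted, 10))  # (110, 44, 60, 60)
```

A tracker only has to look inside this small window, while a detector has to scan the whole frame, which is one reason tracking is usually faster.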

If you are running a face detector on a video and the person’s face gets occluded by an object, the face detector will most likely fail. A good tracking algorithm, on the other hand, will handle some level of occlusion.

How to install OpenCV on Mac OS?

It is simple: you probably already have Python installed, so use brew to install OpenCV.

brew install opencv

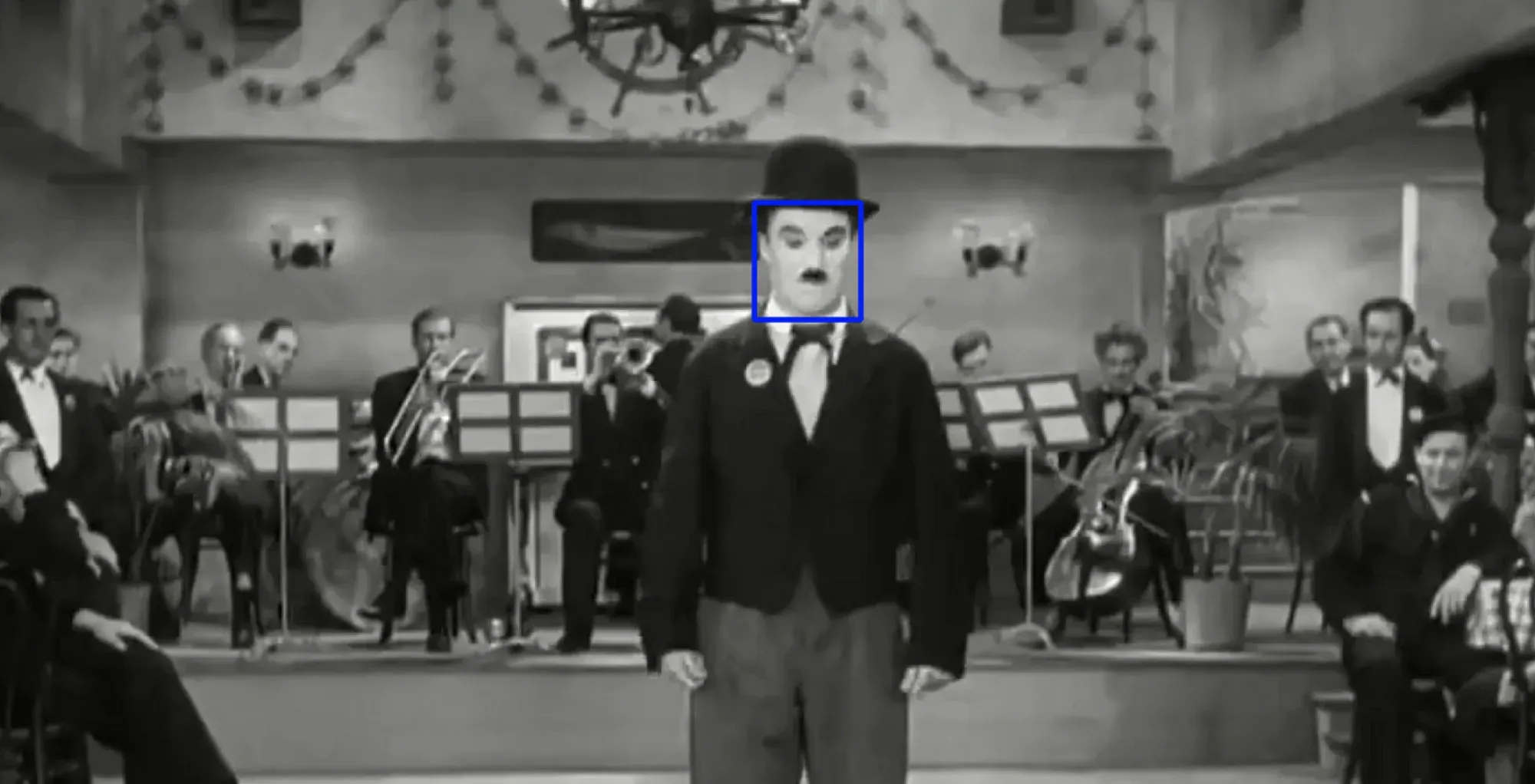

pip3 install numpy

Then I used this video, a short clip of Chaplin, for object tracking. I am trying to track his face while he is dancing and turning around.

Normally the object we are tracking would not disappear, but I used this video in order to compare the different methods provided by OpenCV.

First, import cv2:

import cv2

Then define the list of tracker types:

tracker_types = ['BOOSTING', 'MIL','KCF', 'TLD', 'MEDIANFLOW', 'CSRT', 'MOSSE']
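Each name in this list has to be turned into a tracker object. The sketch below is one way to do that, not necessarily how the linked source code does it. It assumes the constructors follow the `Tracker<Name>_create` naming pattern and live either on `cv2` itself or, in newer builds, on `cv2.legacy`. Which trackers are present depends on your OpenCV version and on whether the contrib modules are installed. The helper name `create_tracker` is hypothetical.

```python
def create_tracker(cv2_module, tracker_type):
    """Look up and call the constructor for `tracker_type` on an OpenCV module.

    Assumption: constructors are named Tracker<Name>_create and are found
    on `cv2` or, in newer builds, on `cv2.legacy`.
    """
    names = {
        'BOOSTING': 'TrackerBoosting_create',
        'MIL': 'TrackerMIL_create',
        'KCF': 'TrackerKCF_create',
        'TLD': 'TrackerTLD_create',
        'MEDIANFLOW': 'TrackerMedianFlow_create',
        'CSRT': 'TrackerCSRT_create',
        'MOSSE': 'TrackerMOSSE_create',
    }
    if tracker_type not in names:
        raise ValueError('unknown tracker type: ' + tracker_type)
    legacy = getattr(cv2_module, 'legacy', None)
    # Try the main namespace first, then the legacy one.
    for namespace in (cv2_module, legacy):
        factory = getattr(namespace, names[tracker_type], None)
        if factory is not None:
            return factory()
    raise RuntimeError(tracker_type + ' is not available in this OpenCV build')
```

With OpenCV installed, the call would look like `tracker = create_tracker(cv2, 'KCF')`. Passing the module in as an argument keeps the helper independent of any particular OpenCV version.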

With the selectROI function of cv2 we can define the box we want to track in the video.

bbox = cv2.selectROI(frame, False)

What are the OpenCV Tracker Algorithms?

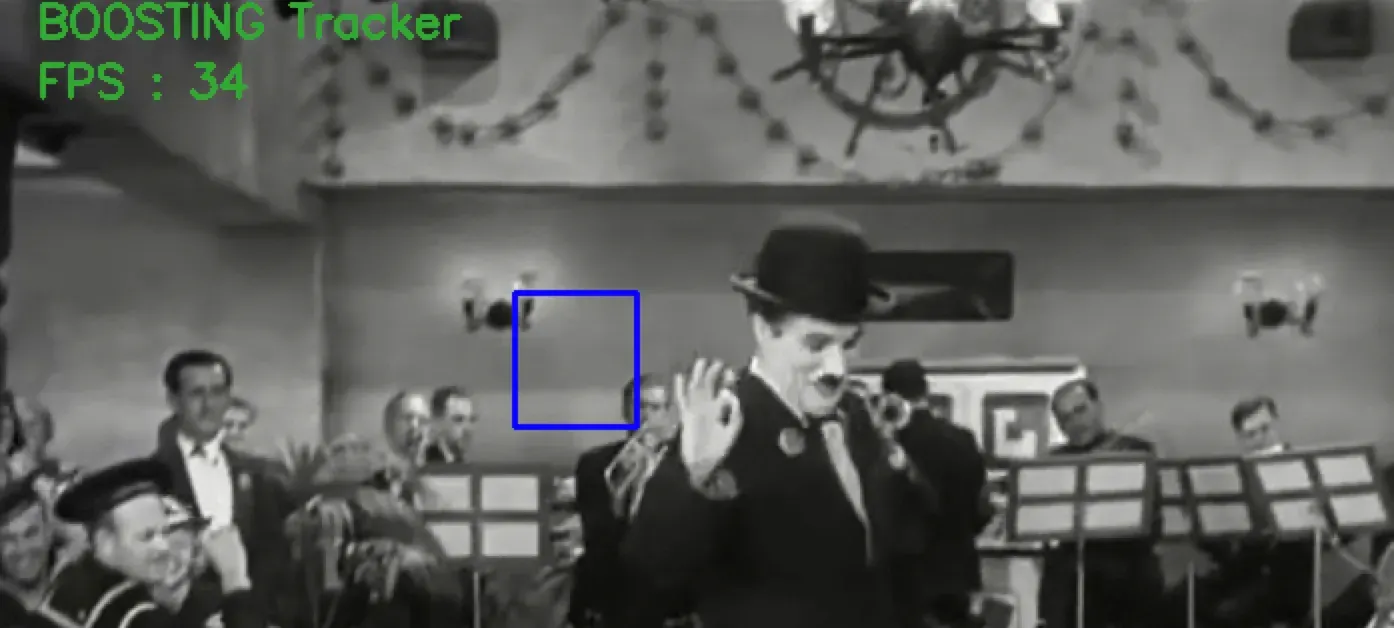

__BOOSTING Tracker

This tracker is based on an online version of AdaBoost, the algorithm that the Haar cascade based face detector uses internally. This classifier needs to be trained at runtime with positive and negative examples of the object. The initial bounding box supplied by the user (or by another object detection algorithm) is taken as the positive example for the object, and many image patches outside the bounding box are treated as the background. Given a new frame, the classifier is run on every pixel in the neighborhood of the previous location and the score of the classifier is recorded. The new location of the object is the one where the score is maximum. So now we have one more positive example for the classifier. As more frames come in, the classifier is updated with this additional data.
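The "run the classifier on every pixel in the neighborhood and keep the maximum" step can be sketched as follows. This shows only the search step, not the AdaBoost classifier itself. The `toy_score` function is a hypothetical stand-in for the trained classifier, and `best_location` is a made-up name.

```python
def best_location(score, prev_xy, radius):
    """Return the pixel in the square neighbourhood with the highest score."""
    px, py = prev_xy
    candidates = [(px + dx, py + dy)
                  for dy in range(-radius, radius + 1)
                  for dx in range(-radius, radius + 1)]
    return max(candidates, key=score)


def toy_score(xy):
    """Hypothetical classifier score that peaks at the true position (53, 41)."""
    return -((xy[0] - 53) ** 2 + (xy[1] - 41) ** 2)


print(best_location(toy_score, (50, 40), 5))  # (53, 41)
```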

Pros : None. This algorithm is a decade old and works ok, but I could not find a good reason to use it especially when other advanced trackers (MIL, KCF) based on similar principles are available.

Cons : Tracking performance is mediocre. It does not reliably know when tracking has failed.

This algorithm fails when Chaplin’s face disappears for a second.

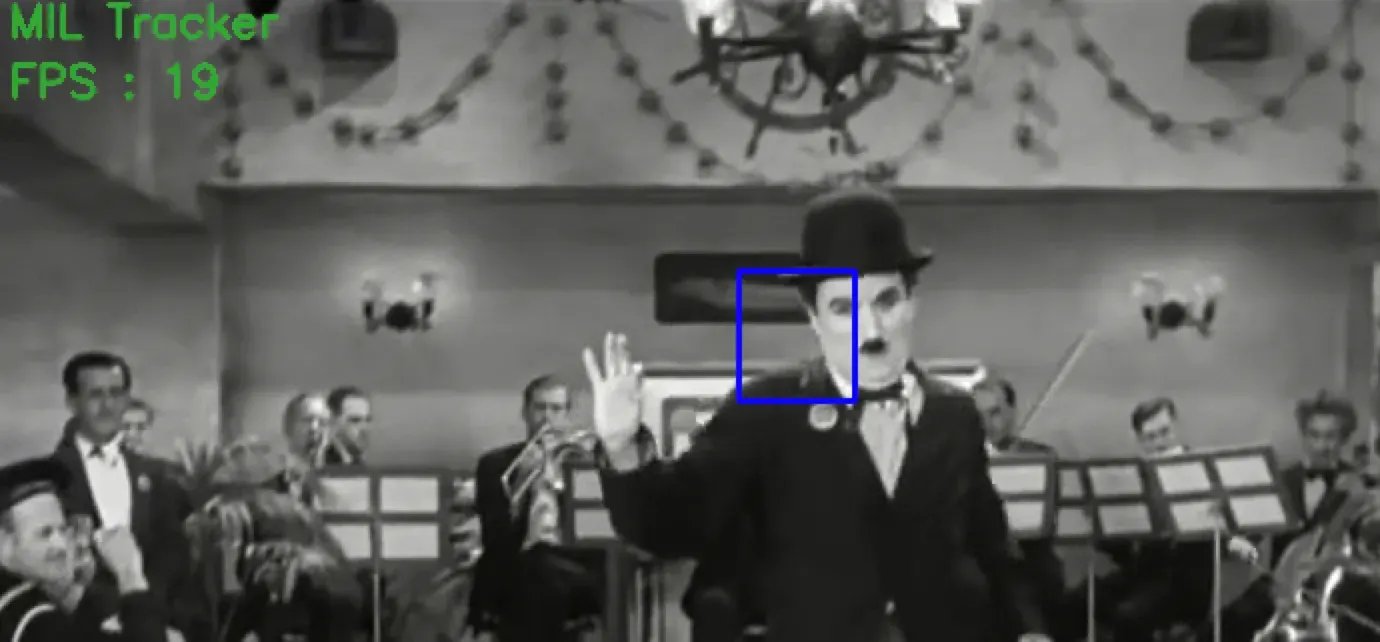

__MIL Tracker

This tracker is similar in idea to the BOOSTING tracker described above. The big difference is that instead of considering only the current location of the object as a positive example, it looks in a small neighbourhood around the current location to generate several potential positive examples. You may be thinking that it is a bad idea because in most of these “positive” examples the object is not centered.

This is where Multiple Instance Learning (MIL) comes to the rescue. In MIL, you do not specify positive and negative examples, but positive and negative “bags”. The images in the positive bag are not all positive examples. Instead, only one image in the positive bag needs to be a positive example! In our example, a positive bag contains the patch centered on the current location of the object and also patches in a small neighbourhood around it. Even if the current location of the tracked object is not accurate, when samples from the neighbourhood of the current location are put in the positive bag, there is a good chance that this bag contains at least one image in which the object is nicely centered. The MIL project page has more information for people who like to dig deeper into the inner workings of the MIL tracker.
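The bag idea can be illustrated with a few lines of code. This is only a sketch of how a positive bag is formed and labelled, not the MIL tracker's learning step. The names `positive_bag` and `bag_is_positive` are hypothetical, and patches are represented by their centre coordinates only.

```python
def positive_bag(center, radius):
    """Collect candidate patch centres in a small neighbourhood of `center`."""
    cx, cy = center
    return [(cx + dx, cy + dy)
            for dy in range(-radius, radius + 1)
            for dx in range(-radius, radius + 1)]


def bag_is_positive(bag, is_positive_instance):
    """A bag counts as positive if at least one instance in it is positive."""
    return any(is_positive_instance(patch) for patch in bag)


# The tracker's estimate (30, 30) is slightly off; the object is really at (31, 29).
bag = positive_bag((30, 30), 2)
print(len(bag))                                       # 25
print(bag_is_positive(bag, lambda p: p == (31, 29)))  # True
```

Even though the estimate is off by a pixel in each direction, the bag still contains the correctly centred patch, which is the point of using bags instead of single examples.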

Pros : The performance is pretty good. It does not drift as much as the BOOSTING tracker and it does a reasonable job under partial occlusion. If you are using OpenCV 3.0, this might be the best tracker available to you.

Cons : Tracking failure is not reported reliably. Does not recover from full occlusion.

As you can see in the picture below, the tracker has lost Chaplin’s face, but it is the closest result we can get in OpenCV.

__KCF Tracker

KCF stands for Kernelized Correlation Filters. This tracker builds on the ideas presented in the previous two trackers. It utilizes the fact that the multiple positive samples used in the MIL tracker have large overlapping regions. This overlapping data leads to some nice mathematical properties that are exploited by this tracker to make tracking faster and more accurate at the same time.
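One of those mathematical properties is that overlapping samples behave like cyclic shifts of each other, so comparing a template against every shift can be done in the Fourier domain. The sketch below illustrates only that shortcut on a 1-D toy signal. It is not the KCF tracker, which also involves kernels and ridge regression. It uses a naive pure-Python DFT for clarity, where a real implementation would use a fast FFT.

```python
import cmath


def dft(values, inverse=False):
    """Naive discrete Fourier transform (O(n^2)), enough for a toy example."""
    n = len(values)
    sign = 1 if inverse else -1
    out = [sum(values[k] * cmath.exp(sign * 2j * cmath.pi * k * m / n)
               for k in range(n))
           for m in range(n)]
    return [v / n for v in out] if inverse else out


def correlate_all_shifts(signal, template):
    """Direct method: dot product of the template with every cyclic shift."""
    n = len(signal)
    return [sum(template[i] * signal[(i + s) % n] for i in range(n))
            for s in range(n)]


def correlate_fourier(signal, template):
    """Same responses computed as an element-wise product in the Fourier domain."""
    spectrum = [s * t.conjugate() for s, t in zip(dft(signal), dft(template))]
    return [v.real for v in dft(spectrum, inverse=True)]


signal = [0, 0, 1, 3, 1, 0, 0, 0]    # the "object" sits two samples to the right
template = [1, 3, 1, 0, 0, 0, 0, 0]
direct = correlate_all_shifts(signal, template)
fast = correlate_fourier(signal, template)
print(direct)                     # [1, 6, 11, 6, 1, 0, 0, 0]
print(direct.index(max(direct)))  # 2
```

Both methods give the same responses up to floating-point error, and the peak at shift 2 recovers the displacement of the object.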

Pros: Accuracy and speed are both better than MIL and it reports tracking failure better than BOOSTING and MIL. If you are using OpenCV 3.1 and above, I recommend using this for most applications.

Cons : Does not recover from full occlusion. Not implemented in OpenCV 3.0.

As you can see in the picture, the frame rate is 409 frames per second, which makes this the fastest of the trackers so far. However, the tracker loses the object more often. Still, this algorithm suits many scenarios, especially real-time processing.

__TLD Tracker

TLD stands for Tracking, Learning and Detection. As the name suggests, this tracker decomposes the long term tracking task into three components: (short term) tracking, learning, and detection. From the author’s paper, “The tracker follows the object from frame to frame. The detector localises all appearances that have been observed so far and corrects the tracker if necessary. The learning estimates detector’s errors and updates it to avoid these errors in the future.” The output of this tracker tends to jump around a bit. For example, if you are tracking a pedestrian and there are other pedestrians in the scene, this tracker can sometimes temporarily track a different pedestrian than the one you intended to track. On the positive side, this tracker appears to track an object over larger scale changes, motion, and occlusion. If you have a video sequence where the object is hidden behind another object, this tracker may be a good choice.

Pros : Works the best under occlusion over multiple frames. Also, tracks best over scale changes.

Cons : Lots of false positives making it almost unusable.

__MEDIANFLOW Tracker

Internally, this tracker tracks the object in both forward and backward directions in time and measures the discrepancies between these two trajectories. Minimizing this forward-backward error enables the tracker to reliably detect tracking failures and select reliable trajectories in video sequences.
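The forward-backward check can be sketched like this. Each point is tracked forward one frame and then backward again, and the distance between where it started and where it ends up is its error. Keeping only the points at or below the median error is an assumption about how the filtering is done, and the toy trackers are hypothetical stand-ins for a real point tracker such as optical flow.

```python
import math
import statistics


def forward_backward_errors(points, track_forward, track_backward):
    """Distance between each point and its forward-then-backward tracked copy."""
    errors = []
    for p in points:
        back = track_backward(track_forward(p))
        errors.append(math.hypot(p[0] - back[0], p[1] - back[1]))
    return errors


def reliable_points(points, errors):
    """Keep points whose error is at or below the median (assumed rule)."""
    threshold = statistics.median(errors)
    return [p for p, e in zip(points, errors) if e <= threshold]


def toy_forward(p):
    """Hypothetical forward tracker: everything moves 5 pixels to the right."""
    return (p[0] + 5, p[1])


def toy_backward(q):
    """Hypothetical backward tracker that drifts for points with x > 100."""
    drift = 4 if q[0] > 100 else 0
    return (q[0] - 5 + drift, q[1])


points = [(10, 10), (20, 10), (30, 10), (200, 10)]
errors = forward_backward_errors(points, toy_forward, toy_backward)
print(errors)                           # [0.0, 0.0, 0.0, 4.0]
print(reliable_points(points, errors))  # [(10, 10), (20, 10), (30, 10)]
```

If most points come back with a large error, that is a strong hint that tracking has failed, which is how this kind of tracker can report failure.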

In my tests, I found this tracker works best when the motion is predictable and small. Unlike other trackers that keep going even when the tracking has clearly failed, this tracker knows when the tracking has failed.

Pros : Excellent tracking failure reporting. Works very well when the motion is predictable and there is no occlusion.

Cons : Fails under large motion.

As you can see, the trade-off between speed and accuracy is acceptable: it is not as accurate as MIL and not as fast as KCF.

__MOSSE tracker

Minimum Output Sum of Squared Error (MOSSE) uses adaptive correlation for object tracking which produces stable correlation filters when initialized using a single frame. MOSSE tracker is robust to variations in lighting, scale, pose, and non-rigid deformations. It also detects occlusion based upon the peak-to-sidelobe ratio, which enables the tracker to pause and resume where it left off when the object reappears. MOSSE tracker also operates at a higher fps (450 fps and even more). To add to the positives, it is also very easy to implement, is as accurate as other complex trackers and much faster. But, on a performance scale, it lags behind the deep learning based trackers.
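The peak-to-sidelobe ratio (PSR) mentioned above compares the correlation peak with the rest of the response map. Here is a minimal sketch, assuming the sidelobe is everything outside a small window around the peak and the ratio is (peak - mean) / standard deviation. The window size, the toy response maps and the function name are illustrative assumptions, not values taken from OpenCV.

```python
import statistics


def peak_to_sidelobe_ratio(response, exclude=1):
    """PSR of a 2-D correlation response given as a list of rows."""
    rows, cols = len(response), len(response[0])
    peak, pr, pc = max((response[r][c], r, c)
                       for r in range(rows) for c in range(cols))
    # Sidelobe: every cell outside the (2 * exclude + 1) square around the peak.
    sidelobe = [response[r][c]
                for r in range(rows) for c in range(cols)
                if abs(r - pr) > exclude or abs(c - pc) > exclude]
    spread = statistics.pstdev(sidelobe)
    if spread == 0:
        return float('inf')
    return (peak - statistics.mean(sidelobe)) / spread


def toy_response(peak_value, size=7):
    """Hypothetical response map: low background with one peak in the centre."""
    grid = [[0.01 * ((r * size + c) % 3) for c in range(size)]
            for r in range(size)]
    grid[size // 2][size // 2] = peak_value
    return grid


clear = peak_to_sidelobe_ratio(toy_response(1.0))      # object clearly visible
occluded = peak_to_sidelobe_ratio(toy_response(0.05))  # weak peak, e.g. occlusion
print(clear > occluded)  # True
```

A tracker can pause its updates while the PSR stays below some threshold and resume when the peak becomes strong again.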

2671 frames per second means losing accuracy but gaining speed. Even when the object moves normally, we can see this algorithm lose the object.

__CSRT tracker

In the Discriminative Correlation Filter with Channel and Spatial Reliability (DCF-CSR), we use the spatial reliability map for adjusting the filter support to the part of the selected region from the frame for tracking. This ensures enlarging and localization of the selected region and improved tracking of the non-rectangular regions or objects. It uses only 2 standard features (HoGs and Colornames). It also operates at a comparatively lower fps (25 fps) but gives higher accuracy for object tracking.

Higher accuracy and slightly better speed are what CSRT gives us.

Conclusion

If you are looking to solve object tracking in videos, OpenCV is one of the best options. It offers different algorithms, and which one works best depends on your scenario.
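To find out which algorithm fits your scenario, a small benchmark harness along these lines could help. It is a sketch, not the code from the repository below. It assumes the tracker exposes `init(frame, bbox)` and `update(frame)` returning `(ok, bbox)`, which is the interface OpenCV tracker objects provide. `StubTracker` is a hypothetical stand-in so the example runs without a video.

```python
import time


def benchmark_tracker(tracker, frames, bbox):
    """Run one tracker over `frames`; return (frames per second, success rate)."""
    frames = list(frames)
    if len(frames) < 2:
        raise ValueError('need at least two frames')
    tracker.init(frames[0], bbox)
    successes = 0
    start = time.perf_counter()
    for frame in frames[1:]:
        ok, bbox = tracker.update(frame)
        successes += bool(ok)
    elapsed = time.perf_counter() - start
    tracked = len(frames) - 1
    fps = tracked / elapsed if elapsed > 0 else float('inf')
    return fps, successes / tracked


class StubTracker:
    """Hypothetical stand-in that loses the object on every third frame."""

    def init(self, frame, bbox):
        self.bbox = bbox
        self.calls = 0

    def update(self, frame):
        self.calls += 1
        return self.calls % 3 != 0, self.bbox


fps, success_rate = benchmark_tracker(StubTracker(), range(10), (0, 0, 10, 10))
print(round(success_rate, 2))  # 0.67
```

Running the same function once per tracker type on the same frames and the same starting box gives speed and failure numbers that can be compared side by side.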

Source Code: https://github.com/ehsangazar/OpenCV-Object-Tracking